Installation

Where is the GleechiLib Prefab?

If you see the GleechiLib prefab used in one of our videos but cannot find it in the SDK: the GleechiLib prefab was removed in version 0_9_6. Since version 1_2_0, the prefabs “SensorAvatar” and “SensorAndReplayAvatars” in Runtime\Resources\Prefabs\ provide an easy way to set up MyVirtualGrasp with GleechiRig.

Hands and Controllers

I have set up the avatar but the hands don’t move.

Make sure you have not ticked the Replay option in the MyVirtualGrasp → Avatars window. See Avatar Types to understand more.

Why do my hands not move smoothly, but rather choppy or laggy, when using VG?

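The VG main loop runs in FixedUpdate rather than Update in order to synchronize VG-powered hand-object interaction with Unity's physics calculations. This can cause visual inconsistency that shows up as non-smooth hand movement, whether or not the hand is holding an object. When the fixed timestep does not match the display's frame interval, some rendered frames receive no FixedUpdate step while others receive one or more, so the hand pose advances unevenly.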

We recommend resolving this by setting Time.fixedDeltaTime to match the refresh rate of the device you are targeting (e.g. 1f / 72f to target 72 Hz displays). You can set it in Start or Awake with Time.fixedDeltaTime = 1f / refreshRate. Alternatively, you can set it manually in the editor under Project Settings -> Time -> Fixed Timestep. If 72 is the refresh rate you are targeting, type 1/72 in this field and press Enter.
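To see why a mismatched timestep looks choppy, the following minimal Python model counts how many fixed steps run in each rendered frame. It is a simplification and not Unity's actual time manager, which also applies settings such as Maximum Allowed Timestep. The function name is hypothetical. The value 0.02 s is Unity's default Fixed Timestep, and 72 Hz is the example refresh rate from the text.

```python
from typing import List


def fixed_steps_per_frame(frame_dt: float, fixed_dt: float, frames: int) -> List[int]:
    """Count how many fixed-timestep updates run in each rendered frame.

    Simplified fixed-timestep loop: each rendered frame adds its duration to an
    accumulator, and one fixed step runs (and is subtracted) for every full
    fixed_dt that has accumulated.
    """
    accumulator = 0.0
    steps_per_frame = []
    for _ in range(frames):
        accumulator += frame_dt
        steps = 0
        while accumulator >= fixed_dt:
            accumulator -= fixed_dt
            steps += 1
        steps_per_frame.append(steps)
    return steps_per_frame


refresh_rate = 72.0
frame_dt = 1.0 / refresh_rate

# Default Fixed Timestep (0.02 s, i.e. 50 Hz) on a 72 Hz display:
print(fixed_steps_per_frame(frame_dt, 0.02, frames=9))  # [0, 1, 1, 0, 1, 1, 0, 1, 1]

# Fixed Timestep matched to the display (1/72 s):
print(fixed_steps_per_frame(frame_dt, 1.0 / refresh_rate, frames=9))  # [1, 1, 1, 1, 1, 1, 1, 1, 1]
```

With the default timestep, roughly one frame in three gets no fixed step, so the hand pose is not advanced in that frame. With 1/72 s, every frame gets exactly one.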

Does VG support other headsets / controllers?

The VG SDK is hardware-agnostic and does not depend on any particular headset, so headset support is mainly a question of what Unity supports. For controllers, the VG SDK provides a customizable controller abstraction (see VG_ExternalControllerManager). It covers a few stand-alone controllers and abstraction layers (such as UnityXR), each of which requires its own Unity SDK. If your controller is not supported, you can either write a VG_ExternalController for it yourself or contact us.

I am using my own hand models and the fingers bend strangely.

Each hand model may be represented differently in terms of how its bones are oriented. If your model's representation is not aligned with the VG_HandProfile, you will see wrist or finger bones bending along the wrong axis. You can resolve this problem by creating your own Hand Profile. Read more on using custom hand models.

Are there any concerns when using Oculus’s OVR Player Controller?

When you use VirtualGrasp, you should not put the hand rig under OVRPlayerController / TrackingSpace. The reason is that VG continuously controls the hand rig under the hood, so you do not need to put the hands into the TrackingSpace in order to move them.

If you also put the avatar under TrackingSpace, the hands, as children of that Transform, will additionally be affected by its movement. In the case of OVRPlayerController, this appears to be acceleration-based movement with damping. This movement induced by the player controller then creates a wiggling behavior when you move the player while holding an object with VG.

I want to use OVR Hand with VirtualGrasp, but my hands cannot grasp any object. Why?

You cannot use OVR Hand (found in the Oculus Integration under Oculus\VR\Scripts\Util\OVRHand.cs) together with VG controllers, because both independently drive the hand model. The OVR hand controller updates the hand pose in Unity’s Update() loop, which runs after FixedUpdate() in each frame. It therefore always overwrites the pose set by VG’s hand controller in the FixedUpdate() loop, and the symptom is that the hands cannot grasp an object. Because VG needs to handle interaction with physical objects, we cannot move the VG update to the Update() loop to avoid this overwriting.
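The overwriting can be illustrated with a minimal Python model of one frame. It assumes Unity's order of event functions, in which a frame's FixedUpdate steps run before Update. The two controller functions are hypothetical stand-ins, not actual VG or OVR code.

```python
from typing import Callable, Dict, List

Hand = Dict[str, str]


def run_frame(fixed_updates: List[Callable[[Hand], None]],
              updates: List[Callable[[Hand], None]],
              fixed_steps: int,
              hand: Hand) -> Hand:
    """Simplified frame: all FixedUpdate steps run first, then Update.
    The returned copy is the pose that would be rendered (last writer wins)."""
    for _ in range(fixed_steps):
        for callback in fixed_updates:
            callback(hand)
    for callback in updates:
        callback(hand)
    return dict(hand)


def vg_fixed_update(hand: Hand) -> None:
    # Hypothetical stand-in: VG writes a synthesized grasp pose in FixedUpdate.
    hand["pose"] = "grasping cup"


def ovr_update(hand: Hand) -> None:
    # Hypothetical stand-in: OVR Hand writes the raw tracked pose in Update.
    hand["pose"] = "tracked open hand"


rendered = run_frame([vg_fixed_update], [ovr_update], fixed_steps=1, hand={})
print(rendered["pose"])  # tracked open hand (VG's grasp pose was overwritten)
```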

Compared to OVR Hand, VG’s sensor integration with Oculus finger tracking (through VG_EC_OculusHand) has equivalent hand control quality, because VG consumes the same Oculus finger tracking signal and integrates it into VG’s hand interaction engine.

The OculusIntegration sample scene provided by the VirtualGrasp package compares the OVR hand controller with VG’s Oculus integration. The video below was recorded in this scene. The bottom pair of hands is controlled by the OVR hand controller and the top pair by VG’s Oculus integration. You can see that they have equivalent tracking quality when the hands move in the air without interacting with objects. When interacting with an object, however, the OVR hands cannot grasp it (because OVR overwrites the hand pose after VG), while VG’s hands can.

Interaction

I added a VG_Articulation component to my game object, but I could not interact with the object when I play.

There could be four reasons:

- The mesh is not readable.

- The added VG_Articulation component is disabled (unchecked).

- You have disabled this VG_Articulation component at runtime.

- At runtime, you have called SetObjectSelectionWeight with a value <= 0.
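The four checks above can be summarized as a small diagnostic sketch in Python. The ArticulatedObject fields and their defaults are hypothetical and only mirror the checklist. In a real project you would inspect the mesh import settings, the component's enabled state and any SetObjectSelectionWeight calls yourself.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ArticulatedObject:
    # Hypothetical snapshot of an object's state; field names are illustrative only.
    name: str
    mesh_readable: bool = True
    articulation_checked_in_editor: bool = True
    articulation_enabled_at_runtime: bool = True
    selection_weight: float = 1.0  # assumed positive by default


def why_not_interactable(obj: ArticulatedObject) -> List[str]:
    """Return every reason from the checklist that applies to obj."""
    reasons = []
    if not obj.mesh_readable:
        reasons.append("mesh is not readable")
    if not obj.articulation_checked_in_editor:
        reasons.append("VG_Articulation component is disabled (unchecked)")
    if not obj.articulation_enabled_at_runtime:
        reasons.append("VG_Articulation component was disabled at runtime")
    if obj.selection_weight <= 0:
        reasons.append("SetObjectSelectionWeight was called with a value <= 0")
    return reasons


door = ArticulatedObject("door", selection_weight=0.0)
print(why_not_interactable(door))  # ['SetObjectSelectionWeight was called with a value <= 0']
```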

I changed the VG_Articulation component on an object at runtime to a different joint type and/or other joint parameters. Why doesn't the interaction with this object reflect these changes?

Object articulation can only be changed at runtime through the API function ChangeObjectJoint. See the runtime changes page.

When I try to catch a fast-moving object, the hand follows the object for a short while, which looks strange.

The default interaction type for all objects is TRIGGER_GRASP, with a Grasp Animation Speed of 0.05 (see Global Grasp Interaction Settings). This means that a grasp moves the hand to the object in 0.05 seconds.

So when you try to catch a fast-moving object, such as a falling one, the hand follows the object for 0.05 seconds to grab it while it falls. There are two quick options for a better experience:

- Decrease the Grasp Animation Speed to 0.01 (the minimum). This makes the grasp happen faster while still moving the hand to the object.

- Change the interaction type for this object to JUMP_GRASP, so that the object moves towards the hand instead. Note that the object still takes the Grasp Animation Speed duration to interpolate into the hand. These and other interaction types are documented here.
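The effect of the Grasp Animation Speed can be estimated with a short worked calculation. It assumes that the hand follows the object for the whole animation and that the object is in free fall, so the distance covered is d = v*t + g*t^2/2. The 3 m/s starting speed is an arbitrary example (roughly the speed after 0.3 s of free fall), and the function name is hypothetical.

```python
G = 9.81  # gravitational acceleration, m/s^2


def distance_during_grasp(speed: float, duration: float) -> float:
    """Distance (m) a freely falling object covers while a grasp animation of
    `duration` seconds plays, given its downward speed (m/s) when the grasp starts."""
    return speed * duration + 0.5 * G * duration ** 2


# An object already falling at 3 m/s when the grasp is triggered:
for duration in (0.05, 0.01):  # default and minimum Grasp Animation Speed
    print(f"{duration:.2f} s -> {distance_during_grasp(3.0, duration) * 100:.1f} cm")
# 0.05 s -> 16.2 cm
# 0.01 s -> 3.0 cm
```

At the minimum setting, the distance the hand has to chase the object shrinks by about a factor of five.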

Note that the Grasp Animation Speed cannot be set per object, but the interaction type can. Either add a VG_Interactable component to the object to change it from the start, or call the API function SetInteractionTypeForObject from your code at runtime.

All my objects are baked, so why do I still get unnatural-looking grasps?

Unnatural-looking grasps can be caused by having set the interaction type of the object to STICKY_HAND. You may have set it either globally for all objects in Global Grasp Interaction Settings, or on a specific object through a VG_Interactable component, which overrides the global settings. To fix it, switch to one of the commonly used interaction types: TRIGGER_GRASP or JUMP_GRASP. See Grasp Interaction Type for more information.
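The precedence between the global setting and a per-object VG_Interactable can be modeled in a few lines of Python. This is a hypothetical sketch of the override rule described above, not VG's implementation, and the object names are made up.

```python
from typing import Dict


def effective_interaction_type(object_name: str,
                               per_object: Dict[str, str],
                               global_type: str) -> str:
    """A per-object setting (as from a VG_Interactable) overrides the global one."""
    return per_object.get(object_name, global_type)


global_type = "TRIGGER_GRASP"        # Global Grasp Interaction Settings
per_object = {"cup": "STICKY_HAND"}  # a VG_Interactable on the cup only

print(effective_interaction_type("cup", per_object, global_type))    # STICKY_HAND
print(effective_interaction_type("plate", per_object, global_type))  # TRIGGER_GRASP

# Fix: switch the cup's override to a commonly used type.
per_object["cup"] = "JUMP_GRASP"
print(effective_interaction_type("cup", per_object, global_type))    # JUMP_GRASP
```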

If the interaction type is not the reason, check whether you have forgotten to assign the grasp DB produced by baking to MyVirtualGrasp -> Grasp DB.

Why do I sometimes get very bad grasps on some tiny objects?

As background: for tiny objects that need precision grasps, VG currently uses two alternative dynamic grasp synthesis algorithms with different levels of grasp quality. When the TRIGGER_GRASP interaction type is used on the object, VG uses the algorithm that produces lower grasp quality, because it creates less wrist rotation offset and so avoids breaking immersion. When the JUMP_GRASP interaction type is used, VG uses the other algorithm, which produces higher grasp quality at the cost of a large wrist rotation offset. However, since the object “jumps” into the hand, the wrist does not leave its sensor pose, so immersion is not broken.

So if you notice tiny objects getting bad grasps, it may be because the TRIGGER_GRASP interaction type is used on them. You can switch the interaction type to JUMP_GRASP. To switch the interaction type for a specific object, either add a VG_Interactable component to the object to change it from the start, or call the API function SetInteractionTypeForObject from your code at runtime.

See Grasp Interaction Type for a more detailed explanation.

Why can I grasp an object with a primary grasp using one hand, while the other hand cannot grasp the object at all?

When an object uses jump primary grasp as its interaction type, primary grasp(s) must be added to the object for both hands. If you only added primary grasp(s) for one hand, the other hand will not be able to grasp the object, and a corresponding warning message is printed to the console.
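The requirement can be expressed as a simple check. The sketch below is hypothetical Python, not VG's API. It assumes that an object's primary grasps are available as a mapping from hand to a list of grasp names.

```python
from typing import Dict, List

HANDS = ("left", "right")


def hands_unable_to_grasp(primary_grasps: Dict[str, List[str]]) -> List[str]:
    """For an object using jump primary grasp, return the hands that have no
    primary grasp defined and therefore cannot grasp the object."""
    return [hand for hand in HANDS if not primary_grasps.get(hand)]


# Hypothetical object with primary grasps added for the right hand only:
mug_grasps = {"right": ["handle_grasp"]}
print(hands_unable_to_grasp(mug_grasps))  # ['left']
```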

Baking

How am I supposed to import the .obj files (in vg_tmp) into my project?

You are not supposed to import the .obj files into your own scenes, since your original models are already there. The main purpose of the .obj files is to hold the raw mesh data of objects that are sent through the Baking Client to the cloud baking service. You can read more in Debug Files.

Why are some of my interactable objects not baked?

This can be caused by multiple things:

- Mesh not readable error, or

- The objects are spawned at runtime and were not exported correctly in the Prepare project step.

Sensor Recording and Replaying

Can I use an avatar with different shaped/sized hands to replay recorded sensor data?

Yes and no. You can always replay recorded sensor data on any avatar’s hands, since what is recorded is sensor data that applies to any hand. However, the same interactive behavior is not guaranteed. If the hands have a different skeleton or size, their pose relative to the objects changes. This can result in, for example, a different grasp being synthesized, or no grasp at all.

Common Unity Error Messages

“Root actors empty”

[VG] [ObjectSelector] [error] Object keypadbutton0/20174 root actors are empty!

This can be caused by multiple things, e.g.:

- The static geometry flag is enabled for the object, or

- The Transform scale is 0 (possibly during an animation) on the object or on any of its parents in the hierarchy.
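A zero scale anywhere in the hierarchy matters because world scale is the product of the local scales from the object up to the root. A single zero factor therefore makes the object's world scale zero as well. The Python model below is a simplification: it ignores rotation, unlike Unity's Transform.lossyScale. The Node class and the "panel" object are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class Node:
    # Minimal stand-in for a Unity Transform: a name, a local scale and a parent.
    name: str
    local_scale: Vec3 = (1.0, 1.0, 1.0)
    parent: Optional["Node"] = None


def world_scale(node: Optional[Node]) -> Vec3:
    """Multiply local scales from the node up to the root (rotation ignored)."""
    sx, sy, sz = 1.0, 1.0, 1.0
    while node is not None:
        x, y, z = node.local_scale
        sx, sy, sz = sx * x, sy * y, sz * z
        node = node.parent
    return (sx, sy, sz)


def zero_scale_nodes(node: Optional[Node]) -> List[str]:
    """Names of the node and its ancestors that have a zero scale component."""
    names = []
    while node is not None:
        if 0.0 in node.local_scale:
            names.append(node.name)
        node = node.parent
    return names


panel = Node("panel", local_scale=(0.0, 0.0, 0.0))  # e.g. scaled to 0 by an animation
button = Node("keypadbutton0", parent=panel)
print(world_scale(button))       # (0.0, 0.0, 0.0)
print(zero_scale_nodes(button))  # ['panel']
```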

Mesh not readable

[12:22:57] The mesh for screwdriver is not readable. Object cannot be processed.

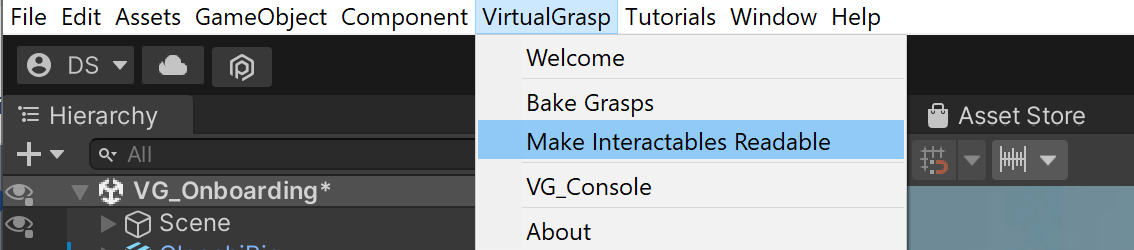

This happens because the “Read/Write enabled” checkbox has not been checked in the model inspector for the source mesh of that MeshRenderer. VG provides a utility script for this, as shown in the image. Clicking Make interactables readable checks this Read/Write checkbox for all objects that have been marked as interactable.

Others

.NET target framework mismatch when using VS Code

Error: The primary reference "virtualgrasp.CSharp.Unity.NET3.5" could not be resolved because it was built against the ".NETFramework,Version=v4.8" framework.

Many threads discuss the same underlying issue: a dependency is compiled against .NET Framework 4.8, while Unity's VS Code plugin is, at the time of writing, hardcoded to 4.7.1. The culprit is the project file Assembly-CSharp.csproj, where the target framework version is hardcoded:

```xml
<TargetFrameworkVersion>v4.7.1</TargetFrameworkVersion>
```

Adding the following property to that .csproj file (inside a PropertyGroup element) lets the IDE ignore these mismatches:

```xml
<ResolveAssemblyReferenceIgnoreTargetFrameworkAttributeVersionMismatch>true</ResolveAssemblyReferenceIgnoreTargetFrameworkAttributeVersionMismatch>
```